It feels like everyone is talking about AI lately. Whether it’s ChatGPT writing ads in the voice of Ryan Reynolds or Lensa creating portraits of your friends on social media, generative AI is getting plenty of attention. And many people are speculating that AI will simplify all kinds of tasks, including marketing tasks.

So, does marketing AI deserve the hype? The answer to that question isn’t simple, but it is worth talking about.

Artificial intelligence has the potential to be game-changing for companies, digital marketers, and everyone in between. But it is a developing field, and you need to understand both its potential and its current limitations.

Artificial intelligence and machine learning 101

Most artificial intelligence (AI) models we’re familiar with are grounded in machine learning. Machine learning uses data and algorithms to imitate the way that we (humans) learn.

Data scientists feed machine-learning algorithms massive sets of data. Sometimes, this learning is “supervised,” which means that humans curate the data and label the inputs and outputs. Other times, the learning is “unsupervised,” meaning the AI is left to its own devices, looking for patterns in unlabeled data.

Regardless, the algorithms will eventually pick up on patterns and trends in their datasets and will predict or analyze additional data based on these patterns.
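To make the difference concrete, here is a minimal sketch in Python. It is a toy, not how production systems are built: the word counts, the labels, and the function names (`train_supervised`, `predict`, `two_means`) are all invented for illustration. The supervised half learns from labeled examples; the unsupervised half gets the same numbers without labels and has to find the two groups on its own.

```python
# Toy illustration only: real systems use far larger datasets and dedicated libraries.
from statistics import mean

# --- Supervised: every training example comes with a human-provided label. ---
# Hypothetical data: (word count, label) for a handful of marketing pieces.
labeled_examples = [
    (40, "social post"), (55, "social post"), (70, "social post"),
    (900, "blog"), (1200, "blog"), (1500, "blog"),
]

def train_supervised(examples):
    """Learn the average word count for each label."""
    by_label = {}
    for value, label in examples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def predict(model, value):
    """Return the label whose learned average is closest to the new value."""
    return min(model, key=lambda label: abs(model[label] - value))

model = train_supervised(labeled_examples)
print(predict(model, 65))    # a short piece
print(predict(model, 1100))  # a long piece

# --- Unsupervised: the same numbers, but with the labels removed. ---
unlabeled = [value for value, _ in labeled_examples]

def two_means(values, rounds=10):
    """Split values into two groups by repeatedly updating two group centers."""
    low, high = min(values), max(values)
    for _ in range(rounds):
        group_a = [v for v in values if abs(v - low) <= abs(v - high)]
        group_b = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(group_a), mean(group_b)
    return sorted(group_a), sorted(group_b)

print(two_means(unlabeled))
```

In both halves, the "learning" is just the pattern the code extracts from its data. Neither half has any idea what a blog or a social post actually is.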

Once trained, these systems have remarkable power, and engineers can use machine learning for many tasks: recognizing that you’re running out of milk in your refrigerator; answering your questions via a smart assistant (like Siri or Alexa); operating an autonomous vehicle; and spotting hard-to-identify risk factors in a patient’s medical history.

What is generative AI?

Generative AI takes machine learning to new places. It is a type of artificial intelligence that can build content, whether that’s copy, an image, code, or a video, based on prompts or queries. ChatGPT, DALL-E, JasperAI, CopyAI, and Lensa are all examples of generative AI.

Generative AI models are trained using massive datasets containing existing webpages, images, and other creative content. When users ask a model to create something, the model relies on this knowledge base for guidance.

For most generative AI models, that dataset is (nearly) the entire internet.
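A deliberately tiny sketch can show the basic idea of "learn from existing content, then generate from a prompt." This is not how ChatGPT or any of the tools above work internally (they use large neural networks); it is a simple word-pair model, and the three-sentence `corpus` and the `train` and `generate` functions are hypothetical stand-ins made up for this example.

```python
import random
from collections import defaultdict

# Hypothetical stand-in for "the internet": three short sentences.
corpus = (
    "good marketing tells a clear story . "
    "good content answers a real question . "
    "clear content tells a real story ."
)

def train(text):
    """Record which words follow each word in the training text."""
    followers = defaultdict(list)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, prompt, max_words=8, seed=0):
    """Extend the prompt one word at a time using only patterns seen in training."""
    rng = random.Random(seed)
    output = [prompt]
    while len(output) < max_words and output[-1] in followers:
        nxt = rng.choice(followers[output[-1]])
        if nxt == ".":  # treat the period as the end of the sentence
            break
        output.append(nxt)
    return " ".join(output)

model = train(corpus)
print(generate(model, "good"))
```

Even at this scale, the output can only recombine what the model has already seen. That is worth keeping in mind when you read the limitations below.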

People vs. machines: A case study

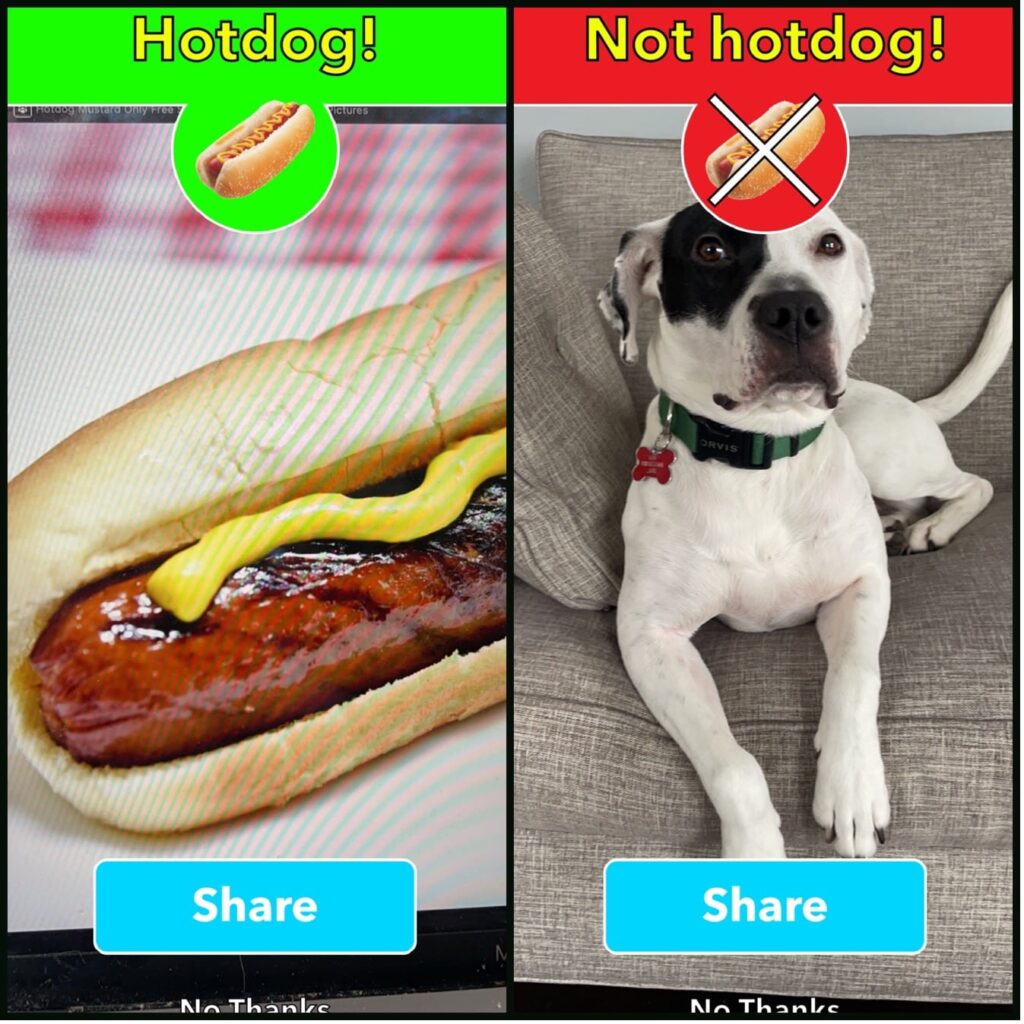

Humble brag: My now-husband was part of a computer science research team in college that taught robots how to mimic human vision, using artificial intelligence. His research helped pave the way for some of the AI-powered technology we use today.

But even though I’ve been hearing about machine learning, neural networks, and other nerdy stuff for decades, I still had a fuzzy understanding of how AI and machine learning work. So, I texted the expert.

- Me: “How would you explain machine learning to someone who is relatively non-technical?”

- The engineer: “It is software that can learn a task by training instead of coding. The easiest example is image recognition. Feed the software many images that are labeled (like food), and then give it a random image to see if it applies the right label based on the patterns it was trained to see.”

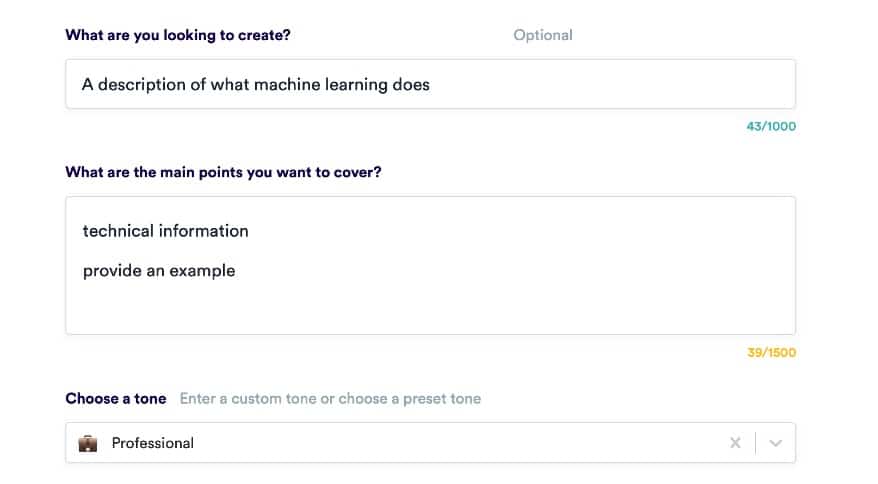

I liked his answer—it was in plain language and offered a real-life example. But I wondered if a generative AI model could do it better. To see what would happen, I posed a similar question to CopyAI’s Freestyle tool. I gave the chatbot just a little more information than I gave my husband—but I intentionally kept my request vague.

- Me:

- CopyAI: “Machine learning is a subset of artificial intelligence that involves teaching computers to learn from data. It’s used in many applications, but the most common are in speech recognition and image classification.

Machine learning algorithms are designed to make predictions based on existing data sets—for example, a program that can tell you what a picture looks like just by analyzing it (and not necessarily by knowing anything about photography). Machine learning algorithms can be “trained” on huge amounts of data to help them predict what will happen in future cases. This is why machine learning has become so popular and useful: it’s able to analyze huge amounts of information and make decisions based on what it learns from that data, which can then be applied elsewhere as well.”

CopyAI did a good job, but the engineer knew how to play to his audience with the image of a cute dog and a Silicon Valley reference. His answer was human; the chatbot’s was not.

Artificial intelligence’s limitations

AI is imperfect in many ways. Before you embrace marketing AI, it’s imperative that you understand its shortcomings.

Generative AI is not human-centered, and might not rank well in search

In 2022, Google announced a series of Helpful Content Updates, which have been rolled together into a new Helpful Content System. The search engine made several things very clear:

- It values human-centered content that answers real people’s questions with high-quality information.

- It does not want to see content that is “search-first,” or written solely to make the algorithms happy.

- It will rarely rank spammy, unedited AI copy for high-value keywords.

Google did not say that all AI-written content will hurt your website. But the content must be useful and human-centered.

Takeaway: While you can give marketing AI a lot of direction, it’s only mimicking human emotions and experiences. It’s also been trained on the volumes of search-first copy on the internet. Unless you’re supplementing your marketing AI with your own insights, expertise, stories, and personality, it might underperform.

Misinformation and discriminatory tendencies

The fact that generative AI models are essentially raised by the internet is a blessing and a curse. They base their answers on existing content that can reflect the best and the worst in humanity, and the bots can’t yet reliably identify potential hate speech or bias.

In 2017, researchers found that AI trained on ordinary text from the internet absorbs the implicit racial and gender biases in that text. Problems like this gave many early AI tools a dubious reputation. For example, Google’s image recognition software infamously tagged photos of Black people as “gorillas.”

Chatbots can also perpetuate misinformation.

Here’s a more personal example of misinformation and bad advice. Last year, I was testing a generative AI model. I asked it to write a blog post about drunk driving during the holidays. It offered up this advice:

“When you’re out and about, make sure that your kids are buckled up in the back seat. If there is an adult who has been drinking in the front seat, it’s best if they’re not holding your child (or anyone else’s).”

While this is true, I would hope that it goes without saying. The same platform also suggested I load my vehicle with heating fuel if I was worried that I might ditch my vehicle while driving home from a party.

Then, I asked the platform’s chatbot some FAQ-style questions about Michigan no-fault law. Some of them were fairly accurate. But I also got this one.

- Me: “How long can I receive no-fault benefits in Michigan?”

- Chatbot: “In Michigan, no-fault benefits are lifetime benefits. This means that a person can receive coverage and compensation for as long as they have a valid policy and their injury or illness meets the criteria.”

This is not an accurate statement of the law. Even if the chatbot was trained using Michigan Auto Law’s impressive library of attorney-written content, the bot still couldn’t articulate the nuances of Michigan’s complicated (and evolving) rules. It also could not accurately answer FAQ-style questions about both long-term disability insurance and Social Security disability.

Takeaway: Again, fact-check everything that AI writes. It cannot replace or replicate your subject matter expertise.

Intellectual property issues

Developers and artists have already filed lawsuits against generative AI companies, arguing that the companies are improperly using creators’ intellectual property to train their algorithms. And writers have discovered AI plagiarizing their work.

CNET, the tech website, is testing generative AI as a means of writing draft articles. After someone called out an AI-written story’s factual errors, the company did a full audit of its AI-generated content. This audit uncovered plagiarism, including full sentences that “closely resembled the original language.”

Takeaway: You should proceed with caution and use plagiarism tools when relying on marketing AI.
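If you are curious what a plagiarism tool is doing under the hood, the sketch below shows one common idea: compare short runs of consecutive words between a draft and a known source. It is an illustration only, not a replacement for a real plagiarism checker; the sample texts, the five-word window, and the function names `shingles` and `overlap_ratio` are assumptions made for this example.

```python
def shingles(text, size=5):
    """Return the set of consecutive word sequences of the given length."""
    words = [w.strip(".,;:!?\"'()").lower() for w in text.split()]
    words = [w for w in words if w]
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def overlap_ratio(draft, source, size=5):
    """Share of the draft's word sequences that also appear in the source."""
    draft_shingles = shingles(draft, size)
    if not draft_shingles:
        return 0.0
    return len(draft_shingles & shingles(source, size)) / len(draft_shingles)

# Hypothetical texts for illustration.
source = "Machine learning is software that can learn a task by training instead of coding."
copied = "Experts say machine learning is software that can learn a task by training."
original = "Our team reviews every AI draft for accuracy before it is published."

print(overlap_ratio(copied, source))    # high: most sequences also appear in the source
print(overlap_ratio(original, source))  # 0.0: no shared five-word sequences
```

A high ratio does not prove plagiarism, and a low one does not rule it out (paraphrasing slips past this check), so treat any score as a prompt for a human review.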

Marketing AI is a tool, not a holistic solution

There will probably be a day when AI can seamlessly drive our cars and write consistently good website copy. We’re not there yet (emphasis on yet). So, please do not simply copy and paste a ChatGPT response onto your blog. You need to vet the copy, check its accuracy, and add your expertise.

Marketing AI is a tool in your toolbox that can help reduce the amount of time you spend on routine marketing tasks. Here’s a quick rundown of when you should (and should not) use marketing AI.

| Use marketing AI when: | Rely on humans when: |
| --- | --- |
| Writing social media posts | Crafting goal-driven marketing strategies and campaigns |
| Building advertising copy | Defining a brand identity or narrative |
| Brainstorming taglines | Creating subject matter expert and research-driven content |
| Populating an FAQ section | Explaining new or rapidly evolving topics |
| Outlining a blog’s structure | Telling your clients’ stories and building social proof |
| Creating automated emails, like the “thank you” messages you get after completing a form | Editing and fact-checking |

LaFleur: Data-driven marketing insights and solutions

LaFleur is more than a marketing agency—we’re a data-driven partner that helps firms and companies grow and evolve. Our team uses technology, creativity, and our expertise to build human-centered marketing strategies. If you’d like to learn more about how we can improve your brand’s reach and influence, we’d love to hear from you.

You can connect with our team by completing our simple online form.

References

Caliskan, A., Bryson, J., & Narayanan, A. (2017, April 14). Semantics derived automatically from language corpora contain human-like biases. Science. Retrieved from https://www.science.org/doi/10.1126/science.aal4230

Guglielmo, C. (2023, January 25). CNET Is Testing an AI Engine. Here’s What We’ve Learned, Mistakes and All. CNET. Retrieved from https://www.cnet.com/tech/cnet-is-testing-an-ai-engine-heres-what-weve-learned-mistakes-and-all/

Harrison, M. (2023, January 14). Viral AI-Written Article Busted as Plagiarized. Futurism. Retrieved from https://futurism.com/ai-written-article-plagiarized

Setty, R. (2023, January 20). First AI Art Generator Lawsuits Threaten Future of Emerging Tech. Bloomberg Law. Retrieved from https://news.bloomberglaw.com/ip-law/first-ai-art-generator-lawsuits-threaten-future-of-emerging-tech

Vincent, J. (2018, January 12). Google ‘fixed’ its racist algorithm by removing gorillas from its image-labeling tech. The Verge. Retrieved from https://www.theverge.com/2018/1/12/16882408/google-racist-gorillas-photo-recognition-algorithm-ai