Not all shiny new AI tools are built to serve your clients or your firm. Here’s how to tell who’s full of it.

There’s something oddly familiar about the new generation of legaltech and AI tools flooding your inbox. Maybe it’s the breathless pitches: “Revolutionize your practice.” “Automate your casework.” “Win more clients without lifting a finger.”

If you were in the legal world during the early 2000s, you might remember when SEO was sold with the same slick confidence. Tools and services with opaque promises, mysterious pricing models, and lots of technical mumbo-jumbo. Salespeople talked fast, showed you line graphs pointing up and to the right, and threw around terms like “link juice” and “pagerank sculpting.” It was hard to know who was legit—and who was just taking your firm’s money while you watched your rankings yo-yo.

AI is the new SEO. While some of it’s genuinely powerful, there’s a whole ecosystem of snake oil peddlers trying to cash in on the confusion. This is particularly true in the legal industry, where high-value transactions often meet a slower pace of tech adoption. It’s the perfect storm for bad actors.

But I don’t want to just issue a warning. I want to give your firm a playbook. I’ve helped firms recover from expensive vendor mistakes. And I’ve led teams that build and evaluate AI tools, so I’ve seen both the possibilities and the pitfalls. This guide is your filter: how to separate the useful from the useless, the future-forward from the flash-in-the-pan—and the real thing from the snake oil.

What makes a snake oil salesman?

The snake oil salesman isn’t always easy to spot—especially when they come wrapped in slick branding and Silicon Valley-speak. But there’s a pattern, and if you’ve been burned before (like with early SEO providers), you’ll recognize it.

In the SEO gold rush, vendors sold you a dream: Get to the top of Google and the clients will come. They promised results without explaining the strategy. They built backlinks in shady corners of the internet, stuffed keywords into your homepage footer, and sold you access to secret “insider” tools. If your rankings tanked after an algorithm update? Not their fault.

AI and legaltech snake oil looks a little different on the surface, but under the hood, it’s eerily similar.

- Techno-jargon. Just like early SEO vendors buried you in meaningless jargon, many AI vendors now flood pitches with undefined terms—“machine-learned insights,” “automated decisioning,” “predictive LLM tuning”—without ever explaining what the product actually does.

- Big promises. In SEO, they promised page-one rankings. Now, they promise quality legal blogs in seconds, perfect intake automation, or “smart assistants” that understand case law better than your paralegal.

- Fearmongering. SEO vendors used to say, “If you’re not on page one, you don’t exist.” Now it’s, “If you’re not using AI, you’ll be left behind.” It’s a tactic, not a truth.

- No accountability. Just like the shady SEO shops that ghosted you after the Penguin and Panda updates, many of today’s AI grifters won’t be around in 12 months when your license renews or when regulators come knocking.

Real technology companies help you understand what you’re buying. They don’t rely on confusion to close the sale. They don’t treat your law firm like a testing ground for a startup’s MVP (minimum viable product). And they don’t make you feel stupid for asking how it all works.

Five red flags to watch for when considering an AI or tech solution

They say “AI” a lot without sharing details

If the word “AI” appears more than once per sentence and never with a clear definition of what it actually does, that’s a problem. Are we talking about natural language processing? Predictive modeling? Machine learning trained on your firm’s own data?

If they can’t tell you, assume they don’t know or that it’s not really AI. Many tools labeled as “AI-powered” are really just glorified decision trees or simple automations. There’s nothing wrong with automation, but calling it AI implies learning, adaptation, or language generation that might not be there.

And even if it’s AI, you need to understand the model, what was in its dataset, and how much human review will be involved. If the model wasn’t trained on your firm’s data or data like it, it’s not going to perform like they claim without significant human intervention.

The demo glosses over risk, compliance, and privacy issues

AI that has access to sensitive information, whether it’s personal injury intakes, immigration case files, or estate planning documents, introduces serious operational and ethical risks. This includes tools that review client data, suggest legal strategies, generate filings, or even assist with intake qualification. At best, these tools might occasionally produce inaccurate or overly generic results. At worst, they might hallucinate case law, leak confidential data, or reinforce harmful biases in high-stakes matters.

If the salesperson hand-waves these risks away with vague reassurances like, “We’ve got guardrails in place,” it’s a red flag. They should be able to tell you what those guardrails are, whether humans review your AI outputs, how they’ll use your data, and what protocols are in place if there’s a data leak or error.

The concept car demo

You’ve probably seen this move before, even if you didn’t realize it at the time. The demo looks slick. The interface is clean. The presenter clicks through a flawless sequence of actions that seem to anticipate your needs before you even speak them. But here’s the problem: it’s not real.

What you’re seeing isn’t a product. It’s a concept car: a beautiful, limited-function prototype designed to impress investors, not to solve real-world problems in your law firm. Behind the scenes, it’s often held together with scripted workflows, placeholder data, and manually triggered responses. Sometimes it’s not even connected to the backend systems it claims to support.

These smoke-and-mirrors demos are more common than most firms realize. You don’t find out until much later, usually after you’ve signed a contract or started onboarding, that the product isn’t fully built, doesn’t integrate with your tools yet, or is missing core features.

In your next demo, ask the salesperson to walk you through a live, unscripted task using a real-world scenario, not their cherry-picked use case. If they stall or redirect, they might be selling you an idea, not a product. You should also ask them for references, real people in the legal industry who are actively using their product.

They market a “set it and forget it” AI solution

AI tools need training, tuning, and a bit of babysitting—especially in a field like law where nuance matters and a bad suggestion isn’t just annoying, it’s a liability. But that’s not always how the pitch goes. Some legaltech salespeople serve AI up like a plug-and-play miracle: sign here, onboard in a day, and suddenly your intake, marketing, research, and reporting are all handled by your new AI assistant. A tiny learning curve. No friction.

Of course, the moment your team starts using it, reality will hit. The AI will likely spit out boilerplate garbage that someone has to clean up. It might misunderstand your practice areas. It might try to automate workflows that aren’t broken.

AI should be sold as a system that needs to be integrated, tested, and adjusted over time. Any vendor who tells you their AI “just works” is either overselling or doesn’t understand how their own product fits into a legal environment.

Surface-level case studies

I’ve seen this one repeatedly. The vendor you’re considering has what looks like an eye-popping case study. Their AI tool helped a tiny law firm (a sole practitioner) rank #1 for “chicago car wreck lawyer,” “chicago bicycle injury lawyer,” and “chicago motorcycle lawyer.” At first glance, that seems impressive. After all, Chicago is a major metropolitan area with lots of competition.

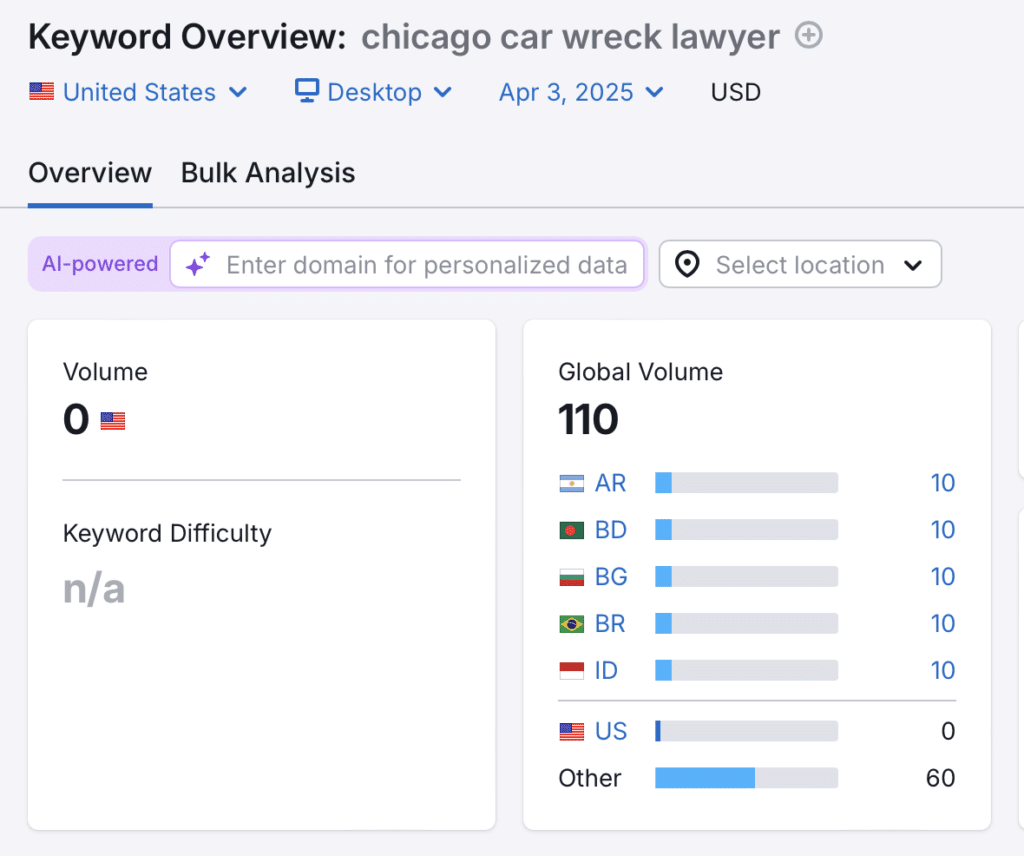

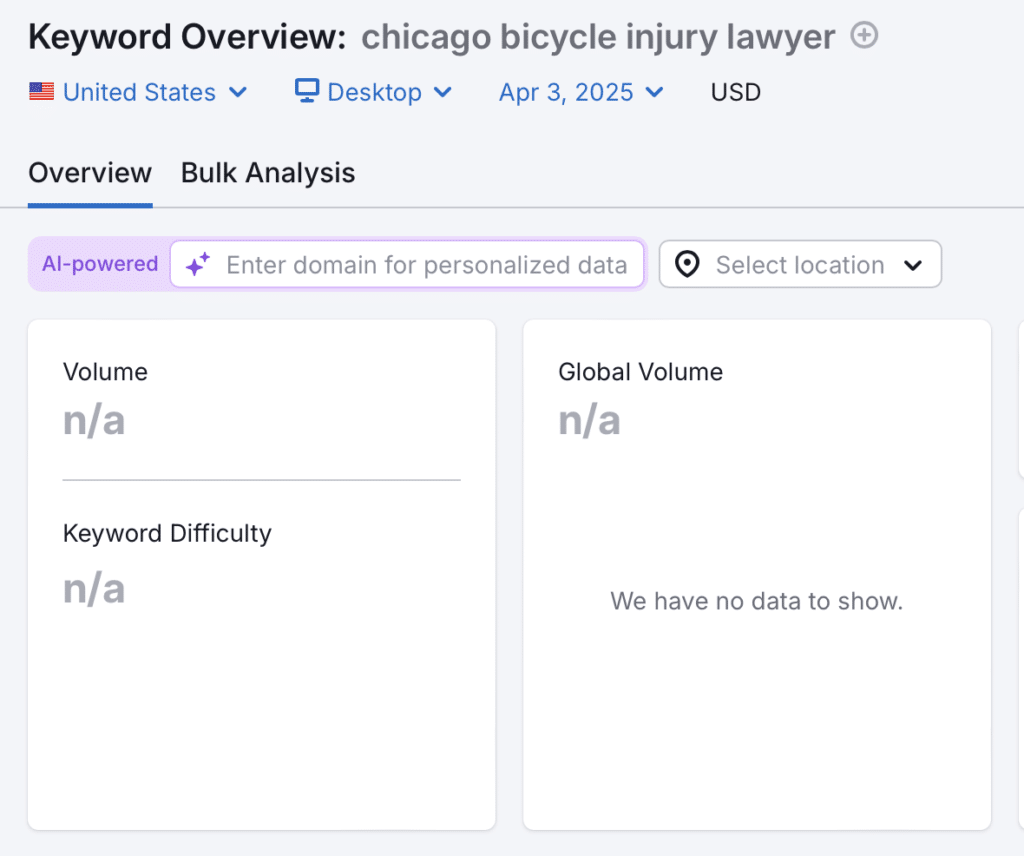

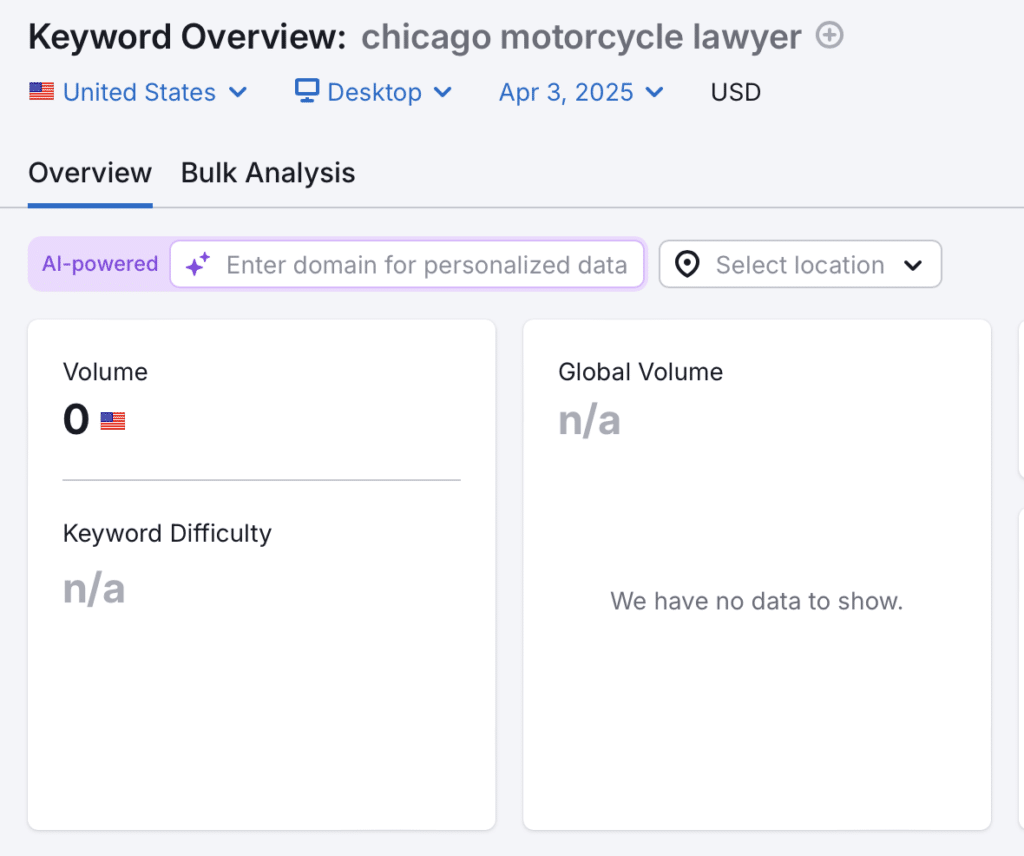

But if you dig into those keywords, it’s actually an old-school SEO grift. The salesperson is cherry-picking terms that look impressive but aren’t. Let’s look at those keywords’ performance in more detail.

No one is searching for these terms. For context, 480 people each month search for “chicago motorcycle accident lawyer” and another 50 look up “best motorcycle accident lawyer in chicago.” People are looking for motorcycle accident lawyers in Chicago, but not using the case study’s hyped-up keywords.

If someone does happen to type in “chicago motorcycle lawyer,” that sole practitioner is going to be the first name to show up in the organic results. But that lawyer is waiting for luck to strike. And that keyword is not making their phone ring off the hook.

When you see a case study like this, trust but verify. Ask the salesperson about each keyword’s monthly search volume and difficulty. Ask how many of that lawyer’s leads were attributable to the vendor’s tech solution.
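
If someone on your team is comfortable with a little Python, the volume check can be roughed out in a few lines. This is only a sketch, not a real SEO tool. The `Keyword` class, the `flag_vanity_keywords` function, and the 50-searches-per-month threshold are all my own illustrative assumptions, and the volume of 0 for the case-study keyword is a placeholder you would replace with the number from your own keyword research tool. The 480 and 50 figures are the ones quoted above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Keyword:
    phrase: str
    monthly_searches: int               # from whatever keyword research tool you trust
    claimed_rank: Optional[int] = None  # rank touted in the case study, if any


def flag_vanity_keywords(
    keywords: List[Keyword], min_monthly_searches: int = 50
) -> List[Keyword]:
    """Return keywords the vendor brags about that fall below the volume threshold."""
    return [
        kw
        for kw in keywords
        if kw.claimed_rank is not None and kw.monthly_searches < min_monthly_searches
    ]


keywords = [
    # Volume of 0 is an assumed placeholder; look up the real number yourself.
    Keyword("chicago motorcycle lawyer", monthly_searches=0, claimed_rank=1),
    # These two volumes are the figures quoted in this article.
    Keyword("chicago motorcycle accident lawyer", monthly_searches=480),
    Keyword("best motorcycle accident lawyer in chicago", monthly_searches=50),
]

for kw in flag_vanity_keywords(keywords):
    print(
        f"Ranks #{kw.claimed_rank} for '{kw.phrase}', "
        f"but only ~{kw.monthly_searches} searches/month"
    )
```

The point is not the script itself. It is the habit of putting a search volume next to every ranking claim before you let the ranking impress you.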

The checklist (Or, how to cross-examine a legaltech salesperson)

Want to separate the serious players from the snake oil? Ask them these questions. Watch what happens.

Strategy and workflow

- How does your tool integrate with Clio, PracticePanther, or our existing case management system?

- What are the most common workflows legal clients use with your product?

- What internal roles or team structures do you typically work with during implementation?

- Do we need to reconfigure our current processes to make your tool work?

Data and compliance

- Does your system store or transmit client data? Where and how is it encrypted?

- Do you use third-party contractors, either domestic or offshore?

- Are any third parties you work with bound by non-disclosure or confidentiality agreements?

- How do you handle privilege, confidentiality, and regulatory compliance?

- If your tool uses generative AI, how do you prevent hallucinations or fabricated outputs?

- What steps have you taken to reduce or eliminate bias in your AI models?

Technology and architecture

- What type of AI do you use? (Machine learning? Large language models? Rule-based logic?)

- Who built and trained your AI models, and what was the training data?

- Do you use any of my firm’s or clients’ information to train your AI models?

- Do we retain ownership over outputs created using your platform?

- What are your protocols if there’s a service outage or data breach?

Support and logistics

- Who is responsible for implementation and ongoing support?

- What does your onboarding process look like, and how long does it take?

- How many law firms of our size and practice area have successfully adopted your solution?

- How often do you update your product, and how are those updates communicated?

If a salesperson fumbles here, or says they’ll “get back to you” more than once, it’s a sign they’re not ready to support the complexity of your firm.
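
If you want to keep score across several vendor calls, that rule of thumb is simple enough to write down. Here is a minimal sketch, assuming you label each answer as `clear`, `fumbled`, or `deferred` in your notes. Those labels, the `is_red_flag` function, and the sample notes are hypothetical, not part of any real product.

```python
from collections import Counter
from typing import Dict

VALID_RESPONSES = {"clear", "fumbled", "deferred"}


def is_red_flag(responses: Dict[str, str]) -> bool:
    """True if the vendor fumbled any question or deferred more than once."""
    unknown = set(responses.values()) - VALID_RESPONSES
    if unknown:
        raise ValueError(f"Unrecognized response labels: {sorted(unknown)}")
    counts = Counter(responses.values())
    return counts["fumbled"] > 0 or counts["deferred"] > 1


# Hypothetical notes from one demo call.
demo_notes = {
    "Where and how is client data encrypted?": "clear",
    "Do you train your models on our clients' information?": "deferred",
    "What are your protocols for a data breach?": "deferred",
    "Who handles implementation and ongoing support?": "clear",
}

print(is_red_flag(demo_notes))  # two deferrals, so this prints True
```

A spreadsheet does the same job. What matters is writing the answers down during the call so the comparison between vendors rests on notes, not on who had the best slides.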

Good tech is often boring

The best legaltech tools? Honestly, they’re a little boring. They help you:

- Reduce errors and manual entry

- Streamline routine tasks

- Maintain compliance

- Surface the right information at the right time

They don’t reinvent your practice overnight. They fit into your practice. Good tech extends your capabilities. It doesn’t replace your expertise.

The same was true in SEO. The firms that succeeded long-term weren’t the ones chasing hacks—they were the ones investing in clean, sustainable, white-hat strategies. Similarly, great AI doesn’t cut corners. It respects the realities of law firm life.

So if it feels too shiny to be real, it probably is.

A human-centered test for AI tools

Before you adopt any tech—especially AI—ask this:

“Will this help us deliver better outcomes for our clients while making our team’s lives easier?”

If the answer is “maybe,” or “only if we completely change how we work,” keep shopping. Legaltech should start with legal problems, not tech solutions. Don’t let someone reverse-engineer your firm into a product demo.

LaFleur: A little skepticism is a good thing

Law firms are the new frontier for AI sales. That means you’re going to get pitched. A lot. Use this guide to stay grounded. Ask hard questions. Demand clarity. And remember that tech—even the most advanced AI—is just a tool. The value comes from how well it supports the people who use it.

The future of law isn’t about robots replacing lawyers. It’s about lawyers who know how to use the right tools, and how to spot the wrong ones.